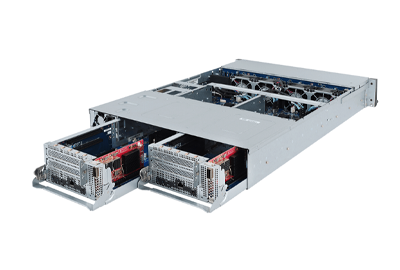

The Gigabyte S260-NF1 Storage Server has a unique design built around two controller modules. Each module is a CPBD530 board-based controller node that accepts up to 3x NVMe over Fabrics (NVMeOF) adapter cards and can access all 24x front-mounted dual-channel NVMe drives. With those adapter cards installed, the system carries NVMe traffic over the network fabric, providing flash storage that scales independently of compute resources and can be accessed from anywhere in the data center.

Utilizing remote direct memory access (RDMA) either directly or indirectly through a switch for superfast data transfers, the Gigabyte S260-NF1 Storage Server features a dual controller architecture with 24x 2.5-inch hot-swappable NVMe SSD bays. It supports up to three Western Digital Onyx NVMeOF adapter cards in each of the two CPBD530 board controller modules, which are in turn routed through PCIe switches to the front-mounted dual-channel NVMe drives. This allows flash storage to be scaled independently of compute, while still using NVMe as the fundamental communication mechanism. Instead of just a bunch of disks (JBOD), this configuration enables just a bunch of flash (JBOF), offering speed, efficiency, and the ability to disaggregate resources to minimize underutilization. Furthermore, to provide fast RDMA access, this system offers either 1x 10Gb or 2x 50Gb Ethernet ports via a QSFP28 interface.

Instead of a CPU, this system features dual Western Digital Onyx NVMe Bridge ASICs. The system can support up to 3x Western Digital Onyx NVMe host bus adapters in each CPBD530 board, enabling NVMe flash storage to be shared across a network of servers. The system is compatible with RDMA over Converged Ethernet (RoCE) v1 and v2, plus iWARP, which enables remote direct memory access over Internet Protocol (IP) networks.

Memory is not part of this system architecture.

24x hot-swappable NVMe SSD drive bays are available on the front of the 2U chassis. All drives are accessible by both controllers, each of which has a PCIe switch with 96 lanes. The dual-channel SSDs supported on this system can be connected to two controllers at a time, allowing for critical redundancy and failover. Instead of a single PCIe 3.0 x4 connection to each supported drive, the link is split into two channels of PCIe 3.0 x2.
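The lane split described above can be checked with some quick arithmetic. The sketch below is illustrative only: the function and constant names are hypothetical, and it assumes each drive's x4 link divides evenly between the two controllers. The per-lane bandwidth is the raw PCIe 3.0 rate (8 GT/s with 128b/130b encoding) before protocol overhead, so real throughput will be lower.

```python
# Illustrative arithmetic only; names are hypothetical, not a vendor tool.
DRIVE_BAYS = 24        # front NVMe bays (from the text)
LANES_PER_DRIVE = 4    # a standard PCIe 3.0 x4 NVMe link
CONTROLLERS = 2        # dual controller modules (from the text)

# PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding.
# Dividing by 8 bits per byte gives roughly 0.985 GB/s per lane, one direction.
PCIE3_GBYTES_PER_LANE = 8 * (128 / 130) / 8


def lanes_per_port(lanes_per_drive: int, ports: int) -> int:
    """Split a drive's lanes evenly across its ports."""
    if lanes_per_drive % ports != 0:
        raise ValueError("lanes do not divide evenly across ports")
    return lanes_per_drive // ports


def drive_lanes_per_controller(bays: int, port_width: int) -> int:
    """Lanes one controller needs to reach every bay through one port."""
    return bays * port_width


port_width = lanes_per_port(LANES_PER_DRIVE, CONTROLLERS)
total = drive_lanes_per_controller(DRIVE_BAYS, port_width)
per_path_gbytes = port_width * PCIE3_GBYTES_PER_LANE

print(f"port width per controller: x{port_width}")
print(f"drive-facing lanes per controller: x{total}")
print(f"raw bandwidth per drive per controller: {per_path_gbytes:.2f} GB/s")
```

Under these assumptions, each controller sees every drive over a x2 link, and 24 bays at x2 each account for 48 drive-facing lanes per controller.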

Each control board has 3x PCIe 3.0 x16 slots, for 6x PCIe slots total across both boards. On each board, a PCIe switch with 96 PCIe 3.0 lanes provides a x48 connection to the installed NVMe storage devices and links them to the Western Digital Onyx NVMe host bus adapter cards, enabling RDMA access to the supported flash drives. Up to 3x Western Digital Onyx NVMeOF adapter cards can be installed on each of the 2x controller nodes, each providing up to 2.5M random read and 2M random write IOPS, for up to 16M IOPS with 6x adapter cards installed.
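As a rough sanity check on the figures above, the sketch below adds up the switch lanes on one board and multiplies out the quoted per-card IOPS. The names are hypothetical, and the calculation assumes perfectly linear scaling across adapter cards, which real workloads may not achieve. The headline IOPS number in the text is the vendor's own figure and is not derived here.

```python
# Illustrative arithmetic only; names are hypothetical.
SWITCH_LANES = 96        # PCIe 3.0 lanes on each board's switch
SLOTS_PER_BOARD = 3      # PCIe 3.0 x16 slots per controller board
SLOT_WIDTH = 16          # lanes per slot
DRIVE_SIDE_LANES = 48    # x48 connection to the NVMe drives
BOARDS = 2               # controller boards in the chassis

READ_IOPS_PER_CARD = 2_500_000    # quoted random read figure per adapter
WRITE_IOPS_PER_CARD = 2_000_000   # quoted random write figure per adapter

# Lanes the switch spends on the adapter slots versus the drives.
slot_side_lanes = SLOTS_PER_BOARD * SLOT_WIDTH
lanes_used = slot_side_lanes + DRIVE_SIDE_LANES
lanes_spare = SWITCH_LANES - lanes_used

# Naive aggregate, assuming every slot on both boards holds an adapter.
cards = SLOTS_PER_BOARD * BOARDS
aggregate_read_iops = cards * READ_IOPS_PER_CARD
aggregate_write_iops = cards * WRITE_IOPS_PER_CARD

print(f"slot-side lanes per board: x{slot_side_lanes}")
print(f"switch lanes used: {lanes_used} of {SWITCH_LANES} ({lanes_spare} spare)")
print(f"adapter cards installed: {cards}")
print(f"aggregate random read IOPS:  {aggregate_read_iops:,}")
print(f"aggregate random write IOPS: {aggregate_write_iops:,}")
```

With three x16 slots and a x48 drive connection, the 96-lane switch is fully allocated, with the slot side and the drive side each taking half.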

System management is through an integrated mLAN port on each of the controller boards, providing access to the ASPEED AST2520 baseboard management controller (BMC).

Designed for speed and efficiency, the Gigabyte S260-NF1 Storage Server makes up to 24x NVMe storage devices available across a network through an RDMA protocol. Using NVMe as the fundamental communication mechanism, external storage enclosures can connect directly or indirectly to a server, enabling better resource utilization across the network.

If you know what you want but can't find the exact configuration you're looking for, have one of our knowledgeable sales staff contact you. Give us a list of the components you would like to incorporate into the system, and the quantities, if more than one. We will get back to you immediately with an official quote.