NVIDIA H200 Tensor Core GPU - System Overview

Additional Information

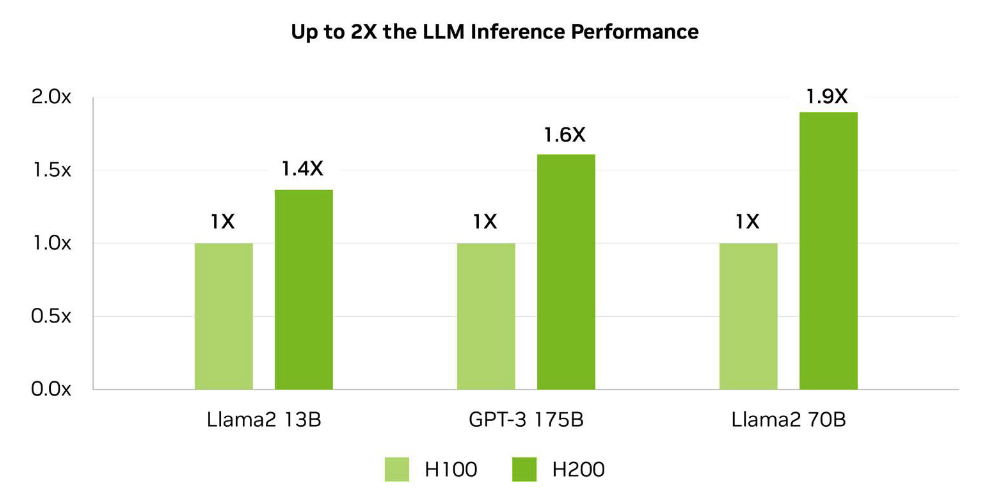

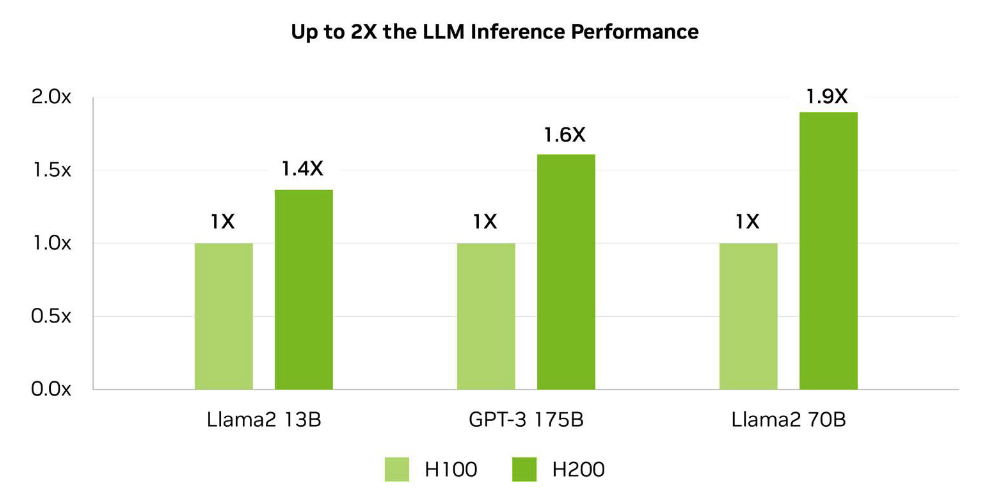

NVIDIA’s H200 Tensor Core GPU comes in an SXM form factor, while the NVIDIA H200 NVL is a PCIe-based card with a lower profile for space-constrained environments. Both offer nearly identical performance. Memory bandwidth is crucial for high-performance computing (HPC), and the H200 provides more of it than the H100, so data reaches the compute units sooner and can be evaluated and manipulated faster. According to NVIDIA, the H200 delivers results up to 110X faster than CPUs on HPC tasks. For GPT-3 175B inference, a state-of-the-art language model with 175 billion parameters, it is 1.6X faster than the H100.

Performance and Memory

The H200 offers the most memory of any NVIDIA GPU to date, 141GB of HBM3e, and delivers 4 petaFLOPS of 8-bit floating point (FP8) performance. FP8 precision is less memory-intensive than the FP32 and FP64 formats used by other high-performance GPUs from NVIDIA. Jointly developed by NVIDIA and Arm, FP8 allows for up to 4X higher inference performance by improving memory efficiency. FP8 is available only on NVIDIA’s latest GPU architectures, Ada Lovelace and Hopper. The NVIDIA H200 NVL also comes with a 5-year NVIDIA AI Enterprise subscription to help develop and deploy production-ready generative AI solutions such as speech AI, computer vision, and retrieval-augmented generation (RAG).
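To make the memory-efficiency point concrete, the following rough sketch estimates how much memory the weights of a 175-billion-parameter model would occupy at different precisions, and the minimum number of 141GB GPUs needed to hold them. It counts weights only; activations, KV cache, and framework overhead are ignored, and the helper names are illustrative rather than part of any NVIDIA tool.

```python
import math

# Bytes per parameter for each numeric format (standard bit widths / 8).
BYTES_PER_PARAM = {"FP64": 8, "FP32": 4, "FP16": 2, "FP8": 1}

H200_MEMORY_GB = 141   # HBM3e capacity quoted in the text
GPT3_PARAMS = 175e9    # parameter count quoted in the text


def weight_footprint_gb(num_params, precision):
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus_for_weights(num_params, precision, gpu_memory_gb=H200_MEMORY_GB):
    """Smallest GPU count whose combined memory can hold the weights."""
    footprint = weight_footprint_gb(num_params, precision)
    return math.ceil(footprint / gpu_memory_gb)


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        gb = weight_footprint_gb(GPT3_PARAMS, precision)
        gpus = min_gpus_for_weights(GPT3_PARAMS, precision)
        print(f"{precision}: {gb:.0f} GB of weights, at least {gpus} GPU(s)")
```

Under these simplifying assumptions, moving from FP32 to FP8 cuts the weight footprint to a quarter, which is where much of the memory-efficiency benefit comes from.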

Both the SXM and PCIe-based versions support Multi-Instance GPU (MIG), which allows the resources of each GPU to be divided into up to seven instances, each with 18GB of memory. Performance indicators for the two form factors are nearly identical; the main differences are thermal output and interconnect. The PCIe card connects through a 2-way or 4-way NVIDIA NVLink bridge, whereas the SXM version is mounted on an NVLink board with 4 or 8 GPUs.
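The MIG figures above can be checked with a little arithmetic. This minimal sketch models only the layout described in the text (up to seven instances at 18GB each on a 141GB GPU); real MIG profiles come in several sizes, and the function name is a hypothetical helper, not an NVIDIA API.

```python
TOTAL_MEMORY_GB = 141        # GPU memory quoted in the text
MAX_INSTANCES = 7            # maximum MIG instances quoted in the text
MEMORY_PER_INSTANCE_GB = 18  # per-instance memory quoted in the text


def mig_memory_summary(instances):
    """Memory assigned to `instances` 18GB slices and what is left over."""
    if not 1 <= instances <= MAX_INSTANCES:
        raise ValueError(f"instances must be between 1 and {MAX_INSTANCES}")
    allocated = instances * MEMORY_PER_INSTANCE_GB
    return {
        "instances": instances,
        "allocated_gb": allocated,
        "unallocated_gb": TOTAL_MEMORY_GB - allocated,
    }


if __name__ == "__main__":
    print(mig_memory_summary(MAX_INSTANCES))
```

With all seven slices in use, 126GB of the 141GB is assigned to instances under this simplified model.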

Cooling and Power

While offering dramatically increased performance, the NVIDIA H200 operates within the same power profile as the H100, reducing TCO by up to 50% on a performance-to-cost basis. The PCIe-based NVIDIA H200 NVL features passive cooling, with airflow provided by the host system. The SXM version is also cooled by the host system, which pushes air over the tall heat sinks on each GPU. Maximum Thermal Design Power (TDP) is listed at up to 700W (configurable) for the SXM form factor and up to 600W (configurable) for the PCIe-based version.
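For capacity planning, the maximum TDP figures translate into a GPU-only power budget per system. The sketch below is a back-of-the-envelope estimate using the maximum values from the text; it excludes CPUs, memory, fans, and power-supply losses, and the names are illustrative.

```python
# Maximum configurable TDP per GPU, in watts, as listed in the text.
MAX_TDP_WATTS = {"SXM": 700, "PCIe": 600}


def gpu_power_budget_kw(form_factor, gpu_count):
    """Worst-case combined GPU power draw for one system, in kilowatts."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return MAX_TDP_WATTS[form_factor] * gpu_count / 1000


if __name__ == "__main__":
    # GPU counts taken from the interconnect options described earlier.
    for form_factor, count in [("SXM", 4), ("SXM", 8), ("PCIe", 2), ("PCIe", 4)]:
        kw = gpu_power_budget_kw(form_factor, count)
        print(f"{count} x {form_factor}: up to {kw:.1f} kW for GPUs alone")
```

Because the TDP is configurable, actual draw may be set lower than these ceilings; the host system's cooling still has to be sized for whatever limit is chosen.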
