NVIDIA Tesla P100 SXM2 GPU - System Overview

Description

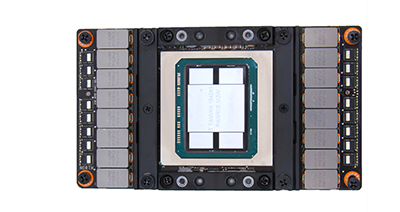

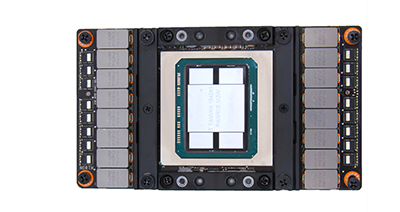

Rather than using a traditional PCI Express card design, this GPU moves to the SXM2 form factor to reach its full potential. The GP100 graphics processor was built to be the highest-performing parallel computing processor for the GPU-accelerated computing market. Its more efficient manufacturing process, architectural upgrades, and HBM2 memory give the P100 a big performance boost over Maxwell-based GPUs. The Tesla P100 also features NVIDIA NVLink technology, enabling superior scaling performance for HPC and hyperscale applications.

Performance

The NVIDIA Tesla P100 SXM2 GPU taps into the NVIDIA Pascal GPU architecture to deliver a unified platform for accelerating both HPC and AI, with dramatically increased throughput while also reducing costs. The P100 was designed for the most challenging compute applications, delivering 5.3 TFLOPS of double-precision (FP64), 10.6 TFLOPS of single-precision (FP32), and 21.2 TFLOPS of half-precision (FP16) performance. Its 15.3 billion transistors support 3584 shading units (CUDA cores), 224 texture mapping units, and 96 ROPs. Up to eight Tesla P100 GPUs can be interconnected, delivering 5x the bandwidth of a PCIe-based infrastructure.
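The quoted peak figures follow from core count times clock speed. The sketch below reproduces them using the 1480 MHz boost clock listed under Memory; it assumes, as labeled in the comments, that each core completes one fused multiply-add (2 FLOPs) per clock, that FP64 runs at half the FP32 rate, and that FP16 runs at twice the FP32 rate. The helper name `peak_tflops` is hypothetical.

```python
# Sketch: reproduce the quoted Tesla P100 SXM2 peak-throughput figures.
# Assumptions (not stated on this page): every CUDA core completes one
# fused multiply-add (2 FLOPs) per clock, the FP64 units number half the
# FP32 cores, and FP16 runs at twice the FP32 rate via packed half2 math.

FP32_CORES = 3584        # "shading units" from the spec list above
BOOST_CLOCK_HZ = 1480e6  # boost clock quoted in the Memory section
FLOPS_PER_FMA = 2        # one multiply plus one add


def peak_tflops(units: int, clock_hz: float, flops_per_clock: int) -> float:
    """Peak rate in TFLOPS: units x clock x FLOPs per unit per clock."""
    return units * clock_hz * flops_per_clock / 1e12


fp64 = peak_tflops(FP32_CORES // 2, BOOST_CLOCK_HZ, FLOPS_PER_FMA)
fp32 = peak_tflops(FP32_CORES, BOOST_CLOCK_HZ, FLOPS_PER_FMA)
fp16 = peak_tflops(FP32_CORES, BOOST_CLOCK_HZ, 2 * FLOPS_PER_FMA)

print(f"FP64 {fp64:.1f} | FP32 {fp32:.1f} | FP16 {fp16:.1f} TFLOPS")
# prints: FP64 5.3 | FP32 10.6 | FP16 21.2 TFLOPS
```

These are theoretical peaks at boost clock; sustained application throughput is typically lower.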

Memory

Memory bandwidth more than doubles over the Maxwell-based Titan X, reaching 732 GB/s thanks to a much wider memory bus. The Tesla P100 SXM2 also increases memory capacity to 16 GB of HBM2 memory with error-correcting code (ECC), packaged using Chip-on-Wafer-on-Substrate (CoWoS) technology. With these features, the GPU provides three times the memory performance of the NVIDIA Maxwell architecture. The 16 GB of CoWoS HBM2 memory runs at 715 MHz on a 4096-bit memory interface. The GPU itself has a base clock of 1328 MHz and a boost clock of 1480 MHz.
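The 732 GB/s figure is bus width times transfer rate. The sketch below assumes HBM2 transfers data on both clock edges (so 715 MHz gives 1430 MT/s per pin) and, for the comparison, assumes the Maxwell-based GTX Titan X uses a 384-bit GDDR5 bus at 7 Gbps per pin; those Titan X figures are not given on this page. The function name `bandwidth_gb_s` is hypothetical.

```python
# Sketch: peak memory bandwidth = bus width x transfer rate.
# Assumptions: HBM2 transfers on both clock edges (715 MHz -> 1430 MT/s
# per pin); the comparison card is the Maxwell-based GTX Titan X with a
# 384-bit GDDR5 bus at 7 Gbps per pin (Titan X figures are not on this page).


def bandwidth_gb_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Bytes per transfer across the whole bus, times transfers per second, in GB/s."""
    return bus_width_bits / 8 * transfers_per_second / 1e9


p100 = bandwidth_gb_s(4096, 715e6 * 2)  # HBM2, double data rate
titan_x = bandwidth_gb_s(384, 7e9)      # GDDR5, 7 GT/s effective

print(f"P100 {p100:.0f} GB/s, Titan X {titan_x:.0f} GB/s, ratio {p100 / titan_x:.2f}x")
# prints: P100 732 GB/s, Titan X 336 GB/s, ratio 2.18x
```

The wide 4096-bit bus is what lets HBM2 reach this bandwidth at a modest 715 MHz memory clock.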

Features

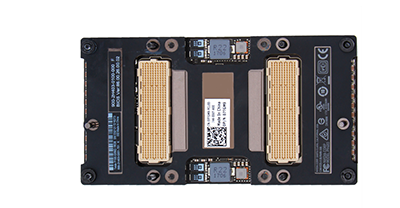

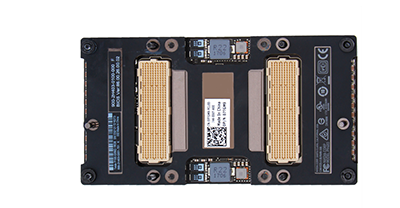

The NVIDIA Tesla P100 SXM2 GPU supports NVIDIA NVLink, a high-speed bidirectional interconnect designed to scale applications across multiple GPUs and substantially accelerate performance. A server node with NVLink can interconnect up to eight Tesla P100s at 5x the bandwidth of PCIe. Because workloads complete on fewer nodes, users can save up to 70% in overall data center costs. The Tesla P100 board design provides both NVLink and PCIe connectivity, and one or more P100 SXM2 GPU accelerators can be used in workstations, servers, and large-scale computing systems. The GPU is rated at 300 W and has no display connectors; instead of plugging into a PCIe slot, the SXM2 module mounts directly onto an NVLink-enabled system board that connects it to the host CPU.
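The "5x the bandwidth of PCIe" claim can be checked with simple arithmetic. This sketch assumes publicly documented NVLink 1.0 and PCIe 3.0 figures that this page does not state: four NVLink links per P100 at 20 GB/s per direction each, versus a PCIe 3.0 x16 slot at 8 GT/s per lane with 128b/130b encoding. Both totals count the two directions together.

```python
# Sketch: where "5x the bandwidth of PCIe" comes from.
# Assumptions (public NVLink 1.0 / PCIe 3.0 figures, not stated on this page):
# P100 SXM2 has 4 NVLink links at 20 GB/s per direction each, compared with
# a PCIe 3.0 x16 slot (8 GT/s per lane, 128b/130b line encoding).

NVLINK_LINKS = 4
NVLINK_GB_S_PER_DIRECTION = 20.0

PCIE_LANES = 16
PCIE_GT_S_PER_LANE = 8.0
PCIE_ENCODING_EFFICIENCY = 128 / 130

# Aggregate bidirectional bandwidth (both directions summed), in GB/s.
nvlink_bidir = NVLINK_LINKS * NVLINK_GB_S_PER_DIRECTION * 2
# Lanes x GT/s x encoding efficiency gives Gb/s per direction; /8 -> GB/s.
pcie_bidir = PCIE_LANES * PCIE_GT_S_PER_LANE * PCIE_ENCODING_EFFICIENCY / 8 * 2

print(f"NVLink {nvlink_bidir:.0f} GB/s vs PCIe {pcie_bidir:.1f} GB/s "
      f"-> {nvlink_bidir / pcie_bidir:.1f}x")
# prints: NVLink 160 GB/s vs PCIe 31.5 GB/s -> 5.1x
```

These are raw link rates; protocol overhead reduces achievable throughput on both interconnects.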

Summary

Built on the Pascal architecture, NVIDIA's Tesla P100 SXM2 GPU accelerator brings together breakthroughs that let users solve problems previously considered impossible. Its 16 GB of CoWoS HBM2 memory and increased bandwidth make the P100 one of the most efficient accelerators for HPC and AI workloads such as deep learning. Compared with previous generations, it improves memory capacity, connectivity, power efficiency, and overall performance.
