Description

The NVIDIA Tesla V100 16GB GPU is built for servers that use standard PCIe slots, the most common form factor. The PCIe version operates at a lower thermal design power (TDP) than the SXM2 version, which uses NVLink for high-bandwidth GPU-to-GPU communication (and GPU-to-CPU communication on platforms that support it). Both the NVIDIA Tesla V100 16GB GPU and the NVIDIA Tesla V100 32GB GPU are well suited to deep learning, quantum chemistry, finance, weather modeling, and more.

Performance

Built on the Volta architecture with 21.1 billion transistors on a 12nm process, the NVIDIA Tesla V100 GPU is designed for demanding compute workloads. It has 5120 shading units (CUDA cores), 320 texture mapping units, and 128 ROPs, plus 640 Tensor Cores that accelerate machine learning applications. The card has a 250-watt power budget and a single 8-pin power connector. Because each card delivers so much compute, users can run the same workloads on fewer servers, which lowers power consumption and total cost.

Memory

The NVIDIA Tesla V100 16GB connects its HBM2 memory to the GPU over a 4096-bit memory interface; the card itself attaches to the host over the PCIe bus. The 16GB of memory runs at a clock speed of up to 877 MHz, for a peak memory bandwidth of up to 900GB/s. Tesla V100 PCIe boards have error correcting code (ECC) enabled to protect the GPU’s memory interface and the on-board memories. The GPU retries any memory transaction that has an ECC error until the data transfer is error-free.
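The quoted peak bandwidth can be roughly checked from the interface width and memory clock given above. The short sketch below does that arithmetic; it assumes two transfers per clock (double data rate), which is not stated in the text, and the function and constant names are illustrative only.

```python
# Rough check of the quoted peak memory bandwidth.
BUS_WIDTH_BITS = 4096      # memory interface width, from the text above
MEMORY_CLOCK_HZ = 877e6    # memory clock, from the text above
TRANSFERS_PER_CLOCK = 2    # assumption: double data rate signalling


def peak_bandwidth_gb_per_s(bus_width_bits, clock_hz, transfers_per_clock):
    """Return theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * clock_hz * transfers_per_clock / 1e9


bandwidth = peak_bandwidth_gb_per_s(
    BUS_WIDTH_BITS, MEMORY_CLOCK_HZ, TRANSFERS_PER_CLOCK
)
print(f"{bandwidth:.1f} GB/s")  # prints "898.0 GB/s"
```

Under that assumption the result is about 898 GB/s, consistent with the "up to 900GB/s" figure.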

Features

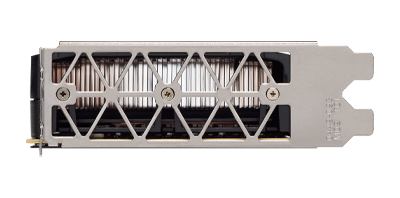

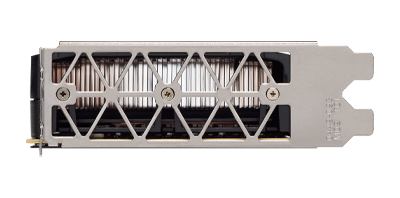

The Tesla V100 PCIe accelerator is cooled by a passive, bidirectional heat sink that accepts airflow from left to right or from right to left. It supports double precision (FP64), single precision (FP32), and half precision (FP16) arithmetic, as well as unified virtual memory and a migration engine. Two operating modes, Maximum Performance (Max-P) and Maximum Efficiency (Max-Q), provide options for managing power consumption. For applications that need the fastest computation and the highest data throughput, Max-P mode lets the card operate unconstrained up to its TDP of 250W. In Max-Q mode, administrators can tune power usage so the card operates at optimal performance per watt. Max-Q is not tied to a specific power number; a power limit can be set via software across all GPUs in a rack.
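One common way to set a software power limit is the `nvidia-smi` command-line tool, whose `-i` option selects a GPU and whose `-pl` option sets a power limit in watts. The sketch below only builds the commands for a group of GPUs and leaves execution off by default. The helper names, the four-GPU count, and the 180W value are hypothetical, chosen for illustration; the range a given board accepts should be checked on the target system.

```python
import subprocess

TDP_WATTS = 250  # maximum board power, from the text above


def build_power_limit_command(gpu_index, watts, max_watts=TDP_WATTS):
    """Build an nvidia-smi command that sets a power limit on one GPU."""
    if not 0 < watts <= max_watts:
        raise ValueError(f"power limit must be in (0, {max_watts}] watts")
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def apply_power_limit(gpu_indices, watts, dry_run=True):
    """Build (and optionally run) the power limit command for each GPU."""
    commands = [build_power_limit_command(i, watts) for i in gpu_indices]
    if not dry_run:
        for command in commands:
            # Changing the limit typically requires administrator rights.
            subprocess.run(command, check=True)
    return commands


# Hypothetical example: cap four GPUs at 180W (dry run, nothing is executed).
commands = apply_power_limit(range(4), 180)
for command in commands:
    print(" ".join(command))
```

Keeping the dry run as the default makes it possible to review the generated commands before applying them across a rack.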

Summary

The double-wide NVIDIA Tesla V100 GPU is well suited to deep learning, science, engineering, and more. Its 16GB of HBM2 memory helps complete projects quickly and cost-effectively. Integrated security, ease of use, and flexibility make this GPU a strong fit for modern high-performance data centers.